Description

Vault is a useful tool for storing sensitive data securely. To read the data, a client must first pass an authentication process. Applications can fetch credentials and certificates for databases, internal and external services, file storage and so on. In addition, Vault can encrypt data that is stored elsewhere, for example in a database (this case is not covered in this post).

In our simple case we prepare a small application that fetches sensitive information from Vault. We only write that data to the log to verify that the solution works.

The Solution

Vault

Basic Vault server configuration is described at: https://spring.io/guides/gs/vault-config/. The guide contains important information such as the required Java version and where to download Vault (https://www.vaultproject.io/downloads). It is recommended to add the directory containing the Vault binary to the system PATH variable.

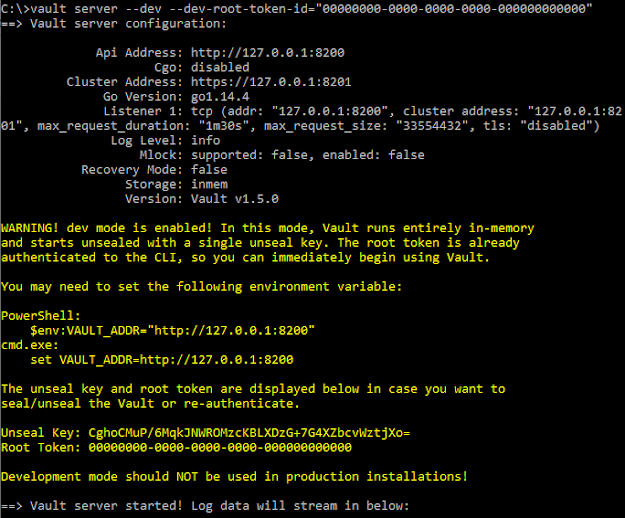

Let's start the Vault server:

vault server --dev --dev-root-token-id="00000000-0000-0000-0000-000000000000"

Next, let's add the secrets:

vault kv put secret/artsci-vault-config artsci.username=artsciUser artsci.password=artsciPass

The same result can be seen in a web browser (http://localhost:8200/). To sign in, use the token we defined at the beginning (00000000-0000-0000-0000-000000000000).

Then select the 'secret' path:

Finally, we can see the secret we created earlier.

As you can see, everything is correct. From here you can manage this item: create a new version or delete it.
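Besides the CLI and the web UI, the secret can also be read over Vault's HTTP API. The sketch below is only an illustration and is not part of the application in this post: the class and method names are hypothetical, and it assumes the dev server's default `secret` mount is a KV version 2 engine, where the read path contains an extra `data/` segment and the token is sent in the `X-Vault-Token` header.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.net.HttpURLConnection;
import java.net.URI;
import java.nio.charset.StandardCharsets;

// Hypothetical helper for illustration; not part of the application in this post.
final class VaultKvRead {

    // Builds the KV version 2 read URL: <vaultUri>/v1/<mount>/data/<secretPath>.
    static String readUrl(String vaultUri, String mount, String secretPath) {
        String base = vaultUri.endsWith("/")
                ? vaultUri.substring(0, vaultUri.length() - 1)
                : vaultUri;
        return base + "/v1/" + mount + "/data/" + secretPath;
    }

    // Sends a GET request with the token in the X-Vault-Token header
    // and returns the raw JSON response body.
    static String readSecretJson(String vaultUri, String token, String secretPath)
            throws IOException {
        HttpURLConnection conn = (HttpURLConnection)
                URI.create(readUrl(vaultUri, "secret", secretPath)).toURL().openConnection();
        conn.setRequestMethod("GET");
        conn.setRequestProperty("X-Vault-Token", token);
        StringBuilder body = new StringBuilder();
        try (BufferedReader in = new BufferedReader(
                new InputStreamReader(conn.getInputStream(), StandardCharsets.UTF_8))) {
            String line;
            while ((line = in.readLine()) != null) {
                body.append(line);
            }
        } finally {
            conn.disconnect();
        }
        return body.toString();
    }
}
```

With the dev server from this post running, calling `VaultKvRead.readSecretJson("http://localhost:8200", "00000000-0000-0000-0000-000000000000", "artsci-vault-config")` should return a JSON document in which the stored key/value pairs sit under `data.data`.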

Spring Boot application

I created a new application with the configuration below. The bootstrap.properties file is very important: its configuration is loaded at the very beginning of application startup.

pom.xml

<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.artsci</groupId>
    <artifactId>artsciVoultSpring</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>Voult client </name>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>2.2.1.RELEASE</version>
    </parent>
    <dependencies>
        <!-- Vault Starter -->
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-starter-vault-config</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <version>1.18.12</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-simple</artifactId>
            <version>1.8.0-beta4</version>
        </dependency>
    </dependencies>
    <dependencyManagement>
        <dependencies>
            <dependency>
                <groupId>org.springframework.cloud</groupId>
                <artifactId>spring-cloud-dependencies</artifactId>
                <version>${spring-cloud.version}</version>
                <type>pom</type>
                <scope>import</scope>
            </dependency>
        </dependencies>
    </dependencyManagement>
    <properties>
        <java.version>1.8</java.version>
        <spring-cloud.version>Greenwich.SR2</spring-cloud.version>
    </properties>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
    <pluginRepositories>
        <pluginRepository>
            <id>central</id>
            <name>Central Repository</name>
            <url>https://repo.maven.apache.org/maven2</url>
            <layout>default</layout>
            <snapshots>
                <enabled>false</enabled>
            </snapshots>
            <releases>
                <updatePolicy>never</updatePolicy>
            </releases>
        </pluginRepository>
    </pluginRepositories>
    <repositories>
        <repository>
            <id>central</id>
            <name>Central Repository</name>
            <url>https://repo.maven.apache.org/maven2</url>
            <layout>default</layout>
            <snapshots>
                <enabled>false</enabled>
            </snapshots>
        </repository>
    </repositories>
</project>

bootstrap.properties

spring.application.name=artsci-vault-config
spring.cloud.vault.uri=http://localhost:8200
spring.cloud.vault.token=00000000-0000-0000-0000-000000000000
spring.cloud.vault.scheme=http
spring.cloud.vault.kv.enabled=true
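The value of spring.application.name matters here because it is the reason the secret was written to secret/artsci-vault-config: according to the Spring Cloud Vault documentation, the lookup paths are built from the backend name, the application name, a default context (`application`) and the active profiles. The sketch below only mimics that documented order for illustration. It is not Spring Cloud Vault's actual implementation, the class and method names are hypothetical, and the ordering for multiple profiles is an assumption.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Illustration only (hypothetical class); mimics the documented lookup order,
// not the library's real code.
final class VaultContextPaths {

    // For each context (application name first, then the default context),
    // profile-specific paths come before the plain path.
    static List<String> contextPaths(String backend, String applicationName,
                                     String defaultContext, List<String> activeProfiles) {
        List<String> paths = new ArrayList<>();
        for (String context : Arrays.asList(applicationName, defaultContext)) {
            for (String profile : activeProfiles) {
                paths.add(backend + "/" + context + "/" + profile);
            }
            paths.add(backend + "/" + context);
        }
        return paths;
    }

    public static void main(String[] args) {
        // No active profiles, as in this post.
        contextPaths("secret", "artsci-vault-config", "application",
                Collections.<String>emptyList())
                .forEach(System.out::println);
    }
}
```

With no active profiles, this yields secret/artsci-vault-config followed by secret/application, so the secret created earlier with `vault kv put` is found under the first path.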

VoltVariables.java

package artsciVoultSpring;

import org.springframework.boot.context.properties.ConfigurationProperties;

import lombok.Data;

@ConfigurationProperties("artsci")
@Data
public class VoltVariables {

    private String username;
    private String password;
}
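The "artsci" prefix in @ConfigurationProperties is what links the Vault keys artsci.username and artsci.password to the username and password fields. As a rough mental model, and not Spring Boot's real binder (which also handles relaxed names and type conversion), the mapping can be sketched with a plain map. The class and method names here are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Simplified illustration of prefix-based binding (hypothetical class).
final class PrefixBindingSketch {

    // Keeps only the entries whose key starts with "<prefix>." and strips that prefix.
    static Map<String, String> stripPrefix(Map<String, String> source, String prefix) {
        String dotted = prefix + ".";
        Map<String, String> bound = new LinkedHashMap<>();
        for (Map.Entry<String, String> entry : source.entrySet()) {
            if (entry.getKey().startsWith(dotted)) {
                bound.put(entry.getKey().substring(dotted.length()), entry.getValue());
            }
        }
        return bound;
    }

    public static void main(String[] args) {
        Map<String, String> secret = new LinkedHashMap<>();
        secret.put("artsci.username", "artsciUser");
        secret.put("artsci.password", "artsciPass");
        secret.put("other.key", "ignored");
        // Only the two artsci.* entries remain, keyed by field name.
        System.out.println(stripPrefix(secret, "artsci").keySet()); // prints [username, password]
    }
}
```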

ArtsciSpringVoultApp.java

package artsciVoultSpring;

import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;
import org.springframework.boot.context.properties.EnableConfigurationProperties;

@SpringBootApplication
@EnableConfigurationProperties(VoltVariables.class)
public class ArtsciSpringVoultApp implements CommandLineRunner {

    private static final Logger logger = LoggerFactory.getLogger(ArtsciSpringVoultApp.class);

    // Populated from the Vault secret through the "artsci" prefix.
    private final VoltVariables voltVariables;

    public ArtsciSpringVoultApp(VoltVariables voltVariables) {
        this.voltVariables = voltVariables;
    }

    public static void main(String[] args) {
        SpringApplication.run(ArtsciSpringVoultApp.class, args);
    }

    // Runs once after startup. Logging the password is for demonstration only.
    @Override
    public void run(String... args) {
        logger.info("----------------------------------------");
        logger.info("Configuration properties");
        logger.info("Username: {}", voltVariables.getUsername());
        logger.info("Password: {}", voltVariables.getPassword());
        logger.info("----------------------------------------");
    }
}

The Results

At the end we can compare the properties stored in Vault with those in the application logs.

So everything looks good. The values are exactly the same :)